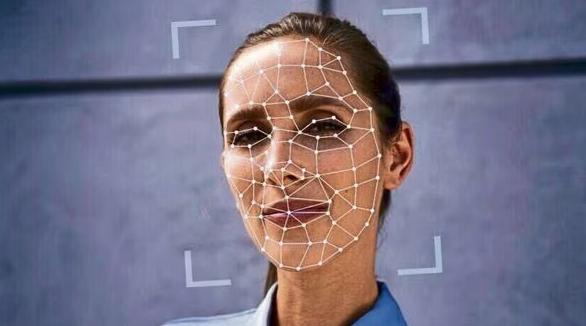

The advent of artificial intelligence (AI) has transformed many sectors, including business. One notable and troubling application of AI is the deepfake, a growing concern for businesses, particularly those adopting remote and hybrid work models. Deepfakes are manipulated or synthetic audio and video content, created with advanced machine learning techniques, that convincingly imitates authentic media such as a real person's face or voice. This article explores why AI deepfake attacks pose an emerging threat in today's business landscape.

Proliferation of remote and hybrid work arrangements

The COVID-19 pandemic accelerated the shift toward remote and flexible work. With employees working from many different locations, verifying identities and ensuring secure communication have become more challenging. Deepfake attacks exploit these vulnerabilities: by impersonating authorized personnel or clients over video or voice, attackers can trick staff into granting unauthorized access, exposing data, or approving fraudulent payments, leading to data breaches and financial losses.

Difficulty in detecting deepfakes

As AI technology advances, deepfakes have become increasingly sophisticated, making them difficult for people to distinguish from genuine content. According to a study by the University of California, Berkeley, even experts cannot identify manipulated audio and video content with complete accuracy. Because detection is so difficult, deepfake attacks can go unnoticed, allowing perpetrators to continue their malicious activities for extended periods.

Increased attack surface

In a remote or hybrid work setting, businesses rely on a range of digital tools and platforms for communication and collaboration. Each of these channels gives cybercriminals another opportunity to launch a deepfake attack. For instance, they can manipulate video conferencing feeds, send fake emails or messages, or impersonate a colleague with a cloned voice during a phone call. This expanded attack surface makes it harder for businesses to protect their assets and maintain secure operations.

Potential reputational damage

Deepfake attacks often involve spreading misinformation or defamatory content, which can seriously harm a company’s reputation. For instance, an attacker could create a deepfake video of a senior executive engaging in unethical behavior, eroding the brand, undermining customer trust, and potentially causing financial losses.

Lack of legal frameworks

Legal frameworks that specifically address deepfakes remain limited and vary widely between jurisdictions, which makes it difficult for businesses to hold perpetrators accountable. Cybercriminals can exploit this gap and carry out deepfake attacks with little fear of repercussions.

Controlling the risks

AI deepfake attacks pose a significant threat to businesses that increasingly rely on remote and hybrid work. The difficulty of detecting deepfakes, the expanded attack surface, the potential for reputational damage, and the lack of legal frameworks all contribute to this growing concern.

To mitigate these risks, businesses must invest in layered security measures, such as AI-powered deepfake detection tools, employee training programs, and robust cybersecurity policies (for example, requiring staff to confirm unusual requests for payments or credentials through a separate, trusted channel). By addressing deepfake threats proactively, companies can protect their assets, maintain secure operations, and preserve their reputation in an ever-evolving digital landscape.

If your business needs help with its security, reach out to our team of friendly professionals. We can help.